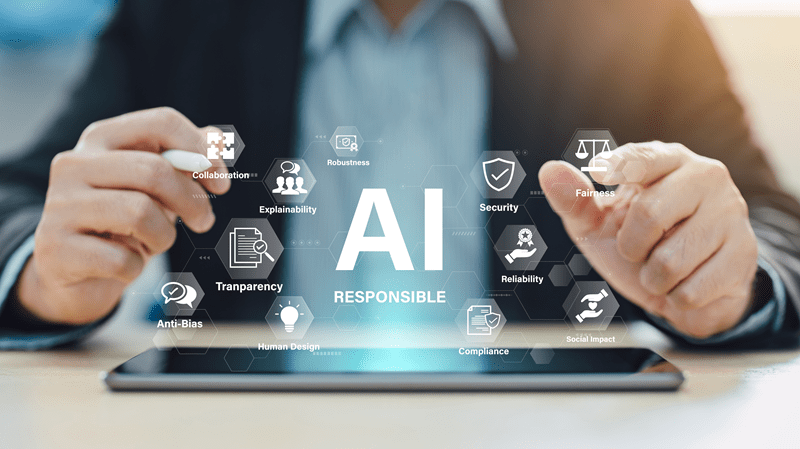

Some tools and platforms can learn and make decisions in ways that resemble human judgment. Responsible AI is about making sure they do this by following fair rules: it is the process of building AI systems that respond appropriately, fairly, and safely. Responsible AI is not focused solely on speed; instead, it aims to create a secure online environment.

In this blog, you will learn how responsible AI keeps humans in control and makes sure that technology helps people instead of replacing them.

Responsible AI means building and using AI systems that respect people and their values.

AI systems learn from data. If that data is wrong or biased, the results will be wrong too. Responsible AI tries to prevent that by checking that the system is fair, clear, and safe.

It also means that AI decisions should be easy to understand. When a system gives a result, people should be able to see the reason behind it. It’s not magic; it’s logic.

Responsible AI is not just about smart machines. It’s about creating technology that people can trust. It keeps human values in every part of the process.


There are some main ideas that guide responsible AI. These ideas make AI systems fair, clear, and reliable.

AI should treat everyone the same. It should not treat people differently because of gender, race, or background.

Sometimes AI is unfair because the data it learns from is biased. To reduce that risk, teams need to check and clean the data regularly.
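Cleaning data can introduce bias of its own. If records from one group are more often incomplete, dropping them quietly shrinks that group. The Python sketch below shows one way to catch this. It removes exact duplicates and records with missing fields, and it also counts how many records were dropped from each group. The function name `clean_records`, the field names `id`, `group`, and `label`, and the toy records are all hypothetical.

```python
from collections import Counter

def clean_records(records, required=("group", "label")):
    """Drop exact duplicates and records missing any required field.

    Returns the cleaned list plus a Counter of dropped records per group,
    so the team can see whether cleaning hit one group harder than others.
    Field names are hypothetical defaults.
    """
    seen = set()
    cleaned = []
    dropped = Counter()
    for row in records:
        key = tuple(sorted(row.items()))  # hashable fingerprint of the record
        missing = any(row.get(field) is None for field in required)
        if missing or key in seen:
            dropped[row.get("group") or "unknown"] += 1
            continue
        seen.add(key)
        cleaned.append(row)
    return cleaned, dropped

if __name__ == "__main__":
    raw = [
        {"id": 1, "group": "A", "label": 1},
        {"id": 1, "group": "A", "label": 1},     # exact duplicate
        {"id": 2, "group": "B", "label": None},  # missing label
        {"id": 3, "group": "B", "label": 0},
    ]
    cleaned, dropped = clean_records(raw)
    print(len(cleaned), dict(dropped))  # → 2 {'A': 1, 'B': 1}
```

If most dropped records come from one group, the team may need to fix the collection process for that group rather than simply delete the rows.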

When AI gives fair results, people start to trust it more. Fairness is the first step toward real responsibility.

Transparency means showing people clearly how the AI works. This makes it easier for them to check that its results are not biased.

Explainability is also essential because it shows people how the AI reached a particular result and which steps it followed to get there. It helps users see that there is logic behind the results.

When things are open and clear, people feel safe using the system. It builds trust naturally.

AI cannot work alone. There should always be a human who checks what it does.

Accountability means someone is responsible if the AI makes a mistake. AI should never make big decisions without human review.

Human review helps keep the system safe and fair. Humans understand emotions and context in ways machines do not, which is why oversight matters.
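One common way to put human review into practice is a routing rule. Any decision that strongly affects a person, or that the model is unsure about, goes to a human reviewer instead of being applied automatically. The sketch below is only an illustration. The `Decision` fields, the `route` function, and the 0.9 confidence threshold are hypothetical; a real team would set them according to its own guidelines.

```python
from dataclasses import dataclass

@dataclass
class Decision:
    subject_id: str
    outcome: str        # what the model recommends, e.g. "approve" or "deny"
    confidence: float   # model's confidence in [0, 1]
    high_impact: bool   # whether the decision significantly affects a person

def route(decision: Decision, min_confidence: float = 0.9) -> str:
    """Return "auto" only for low-impact, high-confidence decisions.

    Everything else is sent to a human. The 0.9 default is a hypothetical
    placeholder, not a recommended value.
    """
    if decision.high_impact or decision.confidence < min_confidence:
        return "human_review"
    return "auto"

if __name__ == "__main__":
    queue = [
        Decision("u1", "approve", 0.97, high_impact=False),
        Decision("u2", "deny", 0.97, high_impact=True),
        Decision("u3", "approve", 0.60, high_impact=False),
    ]
    for d in queue:
        print(d.subject_id, route(d))  # u1 auto, u2 and u3 human_review
```

The rule is deliberately one-sided. A high-impact decision always reaches a person, no matter how confident the model is.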

Responsible AI always protects personal data. It keeps information safe and does not use it without permission.

Data should be stored properly and protected from leaks or attacks. Security is very important because even small risks can cause harm.
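One widely used protection is pseudonymization. A direct identifier, such as an email address, is replaced with a keyed hash before the data is stored or analyzed. The sketch below uses HMAC-SHA256 from Python's standard library. The function name `pseudonymize` and the sample record are hypothetical. In a real system, the key would come from a secrets manager rather than being generated on each run.

```python
import hashlib
import hmac
import secrets

def pseudonymize(identifier: str, key: bytes) -> str:
    """Replace a direct identifier with a keyed HMAC-SHA256 hex digest.

    The same identifier and key always produce the same token, so records
    can still be linked. The raw value is no longer stored, and without the
    key nobody can confirm a guess by re-hashing candidate values.
    """
    return hmac.new(key, identifier.encode("utf-8"), hashlib.sha256).hexdigest()

if __name__ == "__main__":
    # Generated here only to keep the sketch self-contained; in practice the
    # key is created once and kept secret.
    key = secrets.token_bytes(32)
    record = {"email": "jane@example.com", "age_band": "30-39"}
    safe_record = {**record, "email": pseudonymize(record["email"], key)}
    print(safe_record)
```

Pseudonymized data can still count as personal data under laws such as the GDPR. This is one layer of protection, not a replacement for access control and encryption.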

When people know their data is safe, they trust the system more. Privacy and security go hand in hand with responsibility.

AI should work well under different conditions. It should give results that people can rely on.

That means testing systems often and fixing problems quickly. A reliable AI system behaves consistently, giving the same kind of result for the same kind of input.

When people can depend on a system, they use it with confidence.

Best practices are the steps that help teams make AI more responsible. They are simple actions that turn the idea into real work.

Before building anything, teams should set rules. These rules help them know what is right and wrong.

Clear guidelines let everyone focus on doing their work correctly. They also serve as a reminder that the AI tool or platform can have a real-world impact.

The performance of an AI tool depends heavily on data. It is therefore essential to keep the data balanced; otherwise, the AI is much more likely to make unfair decisions.

Collecting data from different sources and groups helps make the AI's decisions fairer. Diverse data gives the system a more complete and representative picture, which leads to better and more honest results.
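A simple first check for balance is to measure how much of the dataset each group contributes and to flag any group that falls below a chosen share. The sketch below does this with `collections.Counter`. The function name, the 20% threshold, and the region data are hypothetical. The right threshold depends on the population the system is meant to serve.

```python
from collections import Counter

def underrepresented_groups(groups, min_share=0.2):
    """Return, sorted, the groups whose share of records is below min_share.

    `groups` holds one group label per record. The 0.2 default is a
    hypothetical threshold that a team would set for its own context.
    """
    counts = Counter(groups)
    total = sum(counts.values())
    return sorted(g for g, n in counts.items() if n / total < min_share)

if __name__ == "__main__":
    # Hypothetical: the region of each record in a training set.
    regions = ["north"] * 50 + ["south"] * 35 + ["east"] * 10 + ["west"] * 5
    print(underrepresented_groups(regions))  # → ['east', 'west']
```

Flagged groups show where collecting more data from other sources may be needed.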

AI systems should not be left alone after launch. They need regular checks to make sure they still work properly.

Monitoring helps find small problems early. It also helps systems stay updated with new data and conditions.
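One common monitoring check compares the distribution of an input in live data with the data the model was trained on. The sketch below computes the Population Stability Index (PSI), a drift measure often used in credit scoring: PSI = Σ (a − e) · ln(a / e), summed over bins, where e is the baseline share and a is the current share in each bin. The bin edges, the sample ages, and the 0.25 alert level are assumptions for illustration. The 0.25 cutoff is a commonly cited rule of thumb, not a fixed standard.

```python
import math
from bisect import bisect_right

def bin_shares(values, edges, eps=1e-6):
    """Share of values falling in each bin defined by sorted interior edges.

    A small eps stands in for empty bins so that log(0) never occurs.
    """
    counts = [0] * (len(edges) + 1)
    for v in values:
        counts[bisect_right(edges, v)] += 1
    total = len(values)
    return [max(c / total, eps) for c in counts]

def psi(baseline, current, edges):
    """Population Stability Index between a baseline and a current sample."""
    expected = bin_shares(baseline, edges)
    actual = bin_shares(current, edges)
    return sum((a - e) * math.log(a / e) for a, e in zip(actual, expected))

if __name__ == "__main__":
    edges = [30, 45, 60]  # hypothetical age-bin boundaries
    baseline = [25, 28, 35, 40, 42, 50, 55, 58, 62, 70]  # training-time ages
    current = [22, 24, 26, 27, 29, 33, 36, 41, 52, 65]   # recent live ages
    score = psi(baseline, current, edges)
    print(f"PSI = {score:.3f}")
    if score > 0.25:  # rule-of-thumb threshold, not a standard
        print("Large shift: review the model before trusting new results")
```

Each term in the sum is zero or positive, so PSI is 0 only when the binned distributions match. A rising value is a signal to retrain or re-check the model.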

People who build AI should know what responsibility means. Training helps them understand fairness, bias, and privacy.

When teams are aware, they make better choices. It also builds a strong culture of responsibility inside the company.

Transparency should start early, not after the system is built. Every step, from collecting data to testing, should be open.

Keeping records helps others understand how the system was made. It also makes it easier to fix issues later.

Here are a few simple ways these principles can work in real projects.

It is important to set the standards before building the system. This avoids confusion later and keeps work consistent.

Responsible AI is not just a job for tech experts. It also needs support from legal teams and social science experts, because different perspectives help identify risks and make AI fairer.

Add tools that explain AI decisions in simple terms. People should know how the system reached a conclusion. This helps users trust the system more.
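For simple additive models, such as a linear scoring model, an explanation can be computed exactly. Each feature's contribution is its weight multiplied by its value, and the contributions plus a bias term add up to the score. The sketch below ranks these contributions to produce plain-language reasons. The weights, the feature names, and the applicant values are hypothetical. More complex models need dedicated explanation methods, because this exact decomposition does not apply to them.

```python
def explain_linear(weights, bias, features, top_k=3):
    """Split a linear score into per-feature contributions (weight * value).

    Returns the total score and the top_k contributions, ranked by size.
    Only exact for linear/additive models.
    """
    contributions = {name: weights[name] * value for name, value in features.items()}
    score = bias + sum(contributions.values())
    ranked = sorted(contributions.items(), key=lambda kv: abs(kv[1]), reverse=True)
    return score, ranked[:top_k]

if __name__ == "__main__":
    # Hypothetical credit-scoring weights and one applicant's scaled features.
    weights = {"income": 0.8, "missed_payments": -1.5, "years_employed": 0.3}
    applicant = {"income": 1.2, "missed_payments": 2.0, "years_employed": 4.0}
    score, reasons = explain_linear(weights, bias=0.5, features=applicant)
    print(f"score = {score:.2f}")
    for name, value in reasons:
        direction = "raised" if value > 0 else "lowered"
        print(f"{name} {direction} the score by {abs(value):.2f}")
```

Ranking by absolute size puts the factors that mattered most first, whether they helped or hurt the result.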

Always protect user data, and never use or share it without permission. Data safety is a core pillar of responsible AI.

AI is changing rapidly, so the people developing it have to stay up to date as well. Continuous learning helps teams update their systems to meet new ethical rules and standards.

Audits check if everything is working fairly. They help find bias or mistakes that might go unnoticed. Think of them as health checks for AI. They keep systems honest and balanced.
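A basic fairness audit compares how often each group receives a favorable outcome from the system. The sketch below computes each group's selection rate and the ratio of the lowest rate to the highest. It flags the result when the ratio falls under 0.8, the "four-fifths" rule of thumb that comes from US employment-selection guidelines. That rule is a screening heuristic, not a universal legal test. The function names and the audit log are hypothetical.

```python
def selection_rates(outcomes):
    """Selection rate per group from (group, selected) pairs."""
    totals, selected = {}, {}
    for group, chosen in outcomes:
        totals[group] = totals.get(group, 0) + 1
        selected[group] = selected.get(group, 0) + int(chosen)
    return {g: selected[g] / totals[g] for g in totals}

def disparate_impact_ratio(outcomes):
    """Lowest group selection rate divided by the highest (1.0 if none selected)."""
    rates = selection_rates(outcomes)
    highest = max(rates.values())
    return min(rates.values()) / highest if highest > 0 else 1.0

if __name__ == "__main__":
    # Hypothetical audit log: (group, was the applicant shortlisted?)
    log = ([("A", True)] * 40 + [("A", False)] * 60
           + [("B", True)] * 25 + [("B", False)] * 75)
    ratio = disparate_impact_ratio(log)
    print(f"selection rates: {selection_rates(log)}")
    print(f"disparate impact ratio: {ratio:.2f}")
    if ratio < 0.8:  # four-fifths rule of thumb
        print("Flag for review: one group is selected far less often")
```

A flag like this does not prove unfairness by itself. It tells auditors where to look more closely.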

Everyone working with AI should take responsibility. It’s not about blaming. It’s about caring for the outcome. When responsibility becomes part of the culture, trust naturally grows.


Responsible AI is about doing what’s right with technology. It means keeping systems fair, safe, and understandable. When people and machines work together responsibly, they can help create a better world. In the end, the smartest AI is the one that stays human at heart.

This content was created by AI